Field: Structural transformation

AI policy for competitiveness

The last two decades have witnessed major advances in artificial intelligence (AI). AI is expected to become even more influential: many recent studies predict that it will transform work and business life around the world. In times of rapid change, policy makers need guidance to prepare for, and respond effectively to, developments that may be unprecedented.

AI refers to systems that display intelligent behaviour by analysing their environment and taking actions, with some degree of autonomy. Applications include self-driving cars, self-improving robots, recommendation systems, and chatbots.

AI policy is a new policy area that has received growing international attention in recent years. Policy makers are increasingly focusing on challenges stemming from policy complexity. This is reflected in the term “policy mix”, which implies a focus on the interactions between different policy instruments, as these affect the extent to which AI policy objectives are implemented. The purpose of this report is to identify the policy instruments that make up the Swedish AI policy mix and to illustrate how the mix is implemented. The report addresses the following research questions:

- What are the policy instruments that make up the Swedish AI policy mix and how is this mix implemented?

- Which regulatory aspects of AI systems do policy makers need to take into consideration?

The AI policy goal is ambitious

The Swedish government writes that countries that succeed in harnessing the benefits of AI, while managing the risks in a responsible manner, will have a great competitive advantage internationally. The Government’s goal is to:

“Make Sweden a leader in harnessing the opportunities that the use of AI can offer, with the aim of strengthening Sweden’s welfare and competitiveness.”

Swedish AI statistics, however, show that Sweden is not a world leader, or even a European leader, in harnessing the benefits of AI. We also observe:

- An AI divide between small and large firms

- Cost is the biggest barrier that prevents firms from using AI

- About one third of firms say that they do not know whether AI ethics or legal issues are an obstacle.

The Swedish AI policy mix

The Swedish government’s goal is to be a leader in harnessing the opportunities AI offers. We observe that this goal is not clear. It is a high-level motivational goal that unidentified implementers of AI policy can strive for, and it gives all implementers room for manoeuvre. Implementers can, of their own free will, reallocate resources within an existing budget to launch AI support instruments. The AI policy design can be described as collaborative governance, in which the AI policy mix is designed by the implementers in boundary-spanning collaboration.

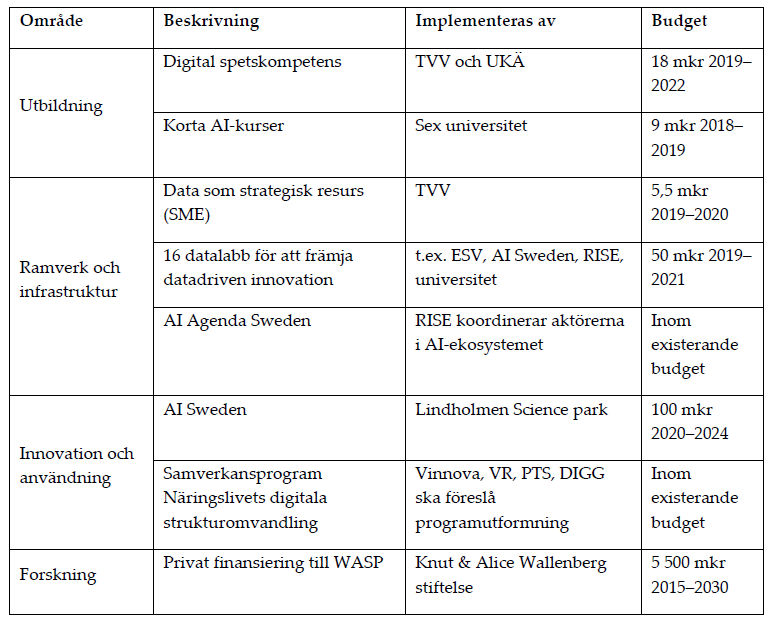

Swedish AI policy emphasises that, in order to realise the potential of AI, four conditions are important: innovation and use, research, education, and framework and infrastructure. The policy instruments that make up the Swedish AI policy mix are identified in the table below.

Table: Identified support instruments in the Swedish AI policy mix

Navigating a complex regulatory landscape

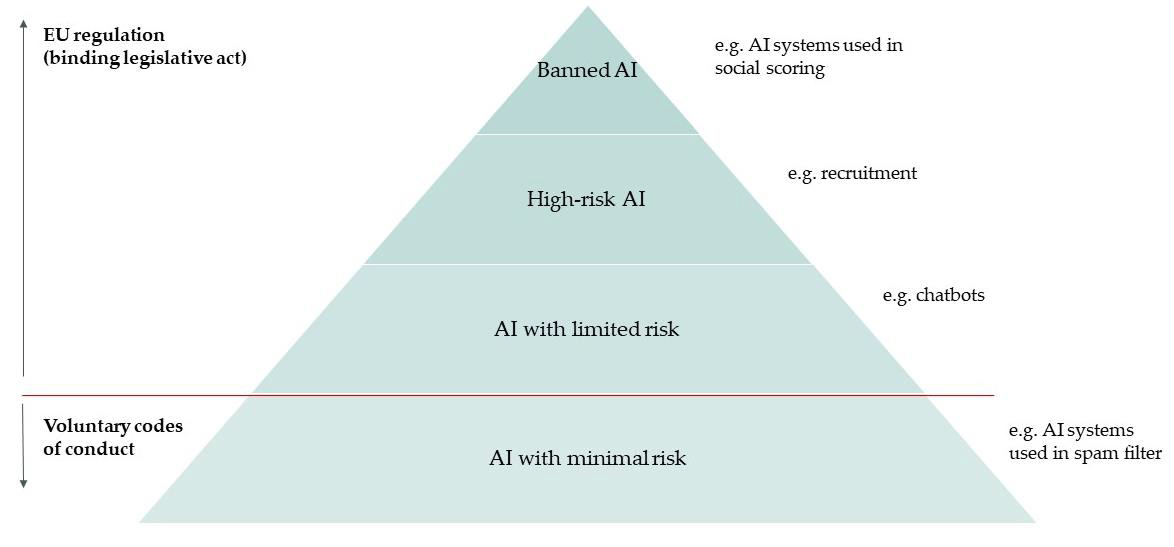

As AI diffuses rapidly, firms and policy makers need to understand the impact of AI systems on policy and, in particular, the emerging and complex AI regulatory landscape. The European Union has proposed a new AI regulatory framework that will apply to all AI developers, deployers and users. The regulation follows a risk-based approach and defines four risk levels; it could enter into force in 2022. AI systems that are considered a clear threat to the safety and rights of people will be banned. AI systems classified as high-risk must comply with mandatory requirements and follow conformity assessment procedures before being placed on the market. One example of a high-risk AI system is a system for recruitment. For AI systems with limited risk, only minimum transparency obligations are proposed, for example when chatbots or ‘deep fakes’ are used. Finally, AI systems with minimal risk, e.g. AI-enabled spam filters, can be used freely. Firms that develop, deploy or use AI systems that are not classified as high-risk could choose to adopt a code of conduct to ensure that their AI systems are trustworthy. Such codes of conduct are voluntary and have no binding force.

Risk categories in the EU proposal for AI regulation. Source: Adaptation based on EC (2021c).

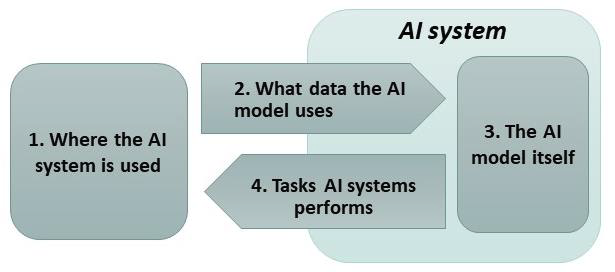
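The four risk levels described above can be read as a simple lookup from use case to risk level to obligation. The following is a minimal sketch of that reading, not a legal classification tool. The names `RiskLevel`, `OBLIGATIONS`, `EXAMPLE_USE_CASES` and `obligations_for` are hypothetical. The label "unacceptable" for the banned category is an assumption, as the text above only says such systems are "a clear threat". Only the example use cases named in the text are included.

```python
from enum import Enum


class RiskLevel(Enum):
    # "unacceptable" is an assumed label for systems the text calls
    # "a clear threat to the safety and rights of people".
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Obligations paraphrased from the summary text; not legal wording.
OBLIGATIONS = {
    RiskLevel.UNACCEPTABLE: "banned",
    RiskLevel.HIGH: "mandatory requirements and conformity assessment "
                    "before being placed on the market",
    RiskLevel.LIMITED: "minimum transparency obligations",
    RiskLevel.MINIMAL: "free use",
}

# Only the examples mentioned in the text; the keys are illustrative.
EXAMPLE_USE_CASES = {
    "recruitment": RiskLevel.HIGH,
    "chatbot": RiskLevel.LIMITED,
    "deep fake": RiskLevel.LIMITED,
    "spam filter": RiskLevel.MINIMAL,
}


def obligations_for(use_case: str) -> str:
    """Return a one-line obligation summary for a named example use case."""
    level = EXAMPLE_USE_CASES.get(use_case.lower())
    if level is None:
        # Real classification depends on the regulation itself,
        # so unknown cases are rejected rather than guessed.
        raise KeyError(f"no example classification for {use_case!r}")
    return f"{level.value} risk: {OBLIGATIONS[level]}"


if __name__ == "__main__":
    for case in EXAMPLE_USE_CASES:
        print(f"{case}: {obligations_for(case)}")
```

The lookup deliberately raises an error for use cases that the text does not mention, since assigning a risk level in practice requires reading the regulation rather than matching a keyword.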

To deepen understanding of the EU rules that firms must follow when they develop, deploy, use and procure AI systems, the figure below focuses on where the AI system is used, what data the AI model uses, the AI model itself and, finally, the tasks that the AI system performs.

Figure: Conceptual framework for understanding the new proposal for AI regulation, based on how AI systems work.

The newly proposed AI regulation applies to all firms that develop, deploy, use and procure AI systems in the EU. AI systems classified as high-risk must comply with mandatory requirements for data governance and management practices, covering high-quality training, testing and validation data sets. The AI model itself must be as transparent and explainable as possible. The tasks the AI system performs are also regulated. When AI systems with limited risk, such as chatbots, are used, users must be made aware that they are interacting with a machine so that they can make an informed decision to continue or step back.

Published:

Serial number: Rapport AU 2022:02:01

Reference number: 2020/257
